Chapter 24

What is Entropy?

Entropy is a complex and abstract concept, yet it is incredibly powerful. One of its most common definitions is that it is a measure of disorder or randomness. There are several other mathematical and statistical definitions, but we need not discuss them here. It is well accepted, though, that as the universe ages its overall entropy increases, but there are local exceptions to this rule. One of the most familiar examples is life, for living things always tend to make organised systems out of the surrounding chaos, although in doing so they increase the entropy of their surroundings. Ultimately, however, life is trivial and only local in the vast scheme of the universe.

Many scientists predict that all order and design will eventually be overruled as the universe settles into its ultimate state, which has been termed “Heat Death”. This happens when all things are finally separated and evenly spread out, with no usable energy left to change anything. Entropy will then be at a maximum.
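
A toy calculation can make the drift towards heat death concrete. The Python sketch below assumes two identical blocks with a constant heat capacity (all values are hypothetical) and moves heat in small steps from the hotter block to the colder one. Each step adds dQ/T_cold of entropy to the cold block and removes dQ/T_hot from the hot one, so the total always rises; once the temperatures match, nothing further can flow even though the total energy is unchanged, and the entropy of this closed pair has reached its maximum.

```python
import math

# Illustrative toy model (assumed values, not from the book): two identical
# blocks exchange heat until they reach the same temperature.
C = 1000.0             # heat capacity of each block, J/K (assumed constant)
T1, T2 = 400.0, 200.0  # starting temperatures, K (assumed)
dQ = 1.0               # heat moved per step, J (small, so each step is near-reversible)

T_hot, T_cold = T1, T2
total_entropy_change = 0.0
energy_before = C * (T_hot + T_cold)  # internal energy measured from 0 K

# Heat flows from hot to cold only while a temperature difference remains.
while T_hot - T_cold > 2 * dQ / C:
    total_entropy_change += dQ / T_cold - dQ / T_hot  # positive while T_hot > T_cold
    T_hot -= dQ / C
    T_cold += dQ / C

energy_after = C * (T_hot + T_cold)

# Closed-form result for the same process, for comparison.
T_final = (T1 + T2) / 2
exact = C * math.log(T_final / T1) + C * math.log(T_final / T2)

print(f"final temperatures: {T_hot:.2f} K and {T_cold:.2f} K")
print(f"entropy gained: {total_entropy_change:.2f} J/K (closed form {exact:.2f} J/K)")
print(f"total energy unchanged: {math.isclose(energy_before, energy_after)}")
```

The small step size keeps the stepwise sum close to the closed-form value C ln(T_f/T1) + C ln(T_f/T2).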

A common question is: does energy influence entropy and what is their relationship?

We could say that entropy means more: more movement, more disorder, more randomness in structure. But does energy fuel that state of change? It seems likely that it does, for energy is used to separate the particles. And this energy can run down. Eventually.
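
One standard, textbook link between energy and entropy is the Clausius relation, ΔS = Q/T: heat Q absorbed at a fixed absolute temperature T raises entropy by Q/T. Melting ice is a convenient case, since the added energy frees molecules from their rigid lattice. This sketch assumes the approximate latent heat of fusion of water, 334 kJ/kg; it illustrates conventional thermodynamics, not a result from this book.

```python
# Clausius relation for heat absorbed reversibly at constant temperature:
#   delta_S = Q / T
LATENT_HEAT_FUSION = 334_000.0  # J/kg, approximate textbook value for water ice
T_MELT = 273.15                 # K, melting point of ice at 1 atm


def entropy_change(heat_joules: float, temperature_kelvin: float) -> float:
    """Entropy change (J/K) when heat is absorbed at a fixed temperature."""
    if temperature_kelvin <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return heat_joules / temperature_kelvin


mass = 1.0  # kg of ice (illustrative)
delta_s = entropy_change(mass * LATENT_HEAT_FUSION, T_MELT)
print(f"Entropy gained by melting {mass} kg of ice: {delta_s:.1f} J/K")
```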

There is an equation for entropy, Boltzmann’s S = k ln W, and it involves the number of possible microscopic configurations, W, available to a system. More states means more entropy. But can entropy be stored, creating a kind of potential entropy?

This might be useful.
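
Boltzmann’s formula, S = k_B ln W, can be tried out on a toy system. The sketch below uses 10 coins as a hypothetical stand-in for particles: a macrostate is the number of heads, and W counts the arrangements that produce it. It only illustrates “more states means more entropy”; it is not a model of any physical system discussed here.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)


def microstates(n_coins: int, n_heads: int) -> int:
    """Number of arrangements W with exactly n_heads heads among n_coins."""
    return math.comb(n_coins, n_heads)


def boltzmann_entropy(w: int) -> float:
    """S = k_B ln W, in J/K."""
    return K_B * math.log(w)


N = 10
for heads in range(N + 1):
    w = microstates(N, heads)
    print(f"{heads:2d} heads: W = {w:3d}, S = {boltzmann_entropy(w):.3e} J/K")
```

The perfectly ordered states (all heads or all tails) have a single arrangement and zero entropy, while the evenly mixed state has the most arrangements (252) and the highest entropy.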

The Origin of Everything

(Online Edition)

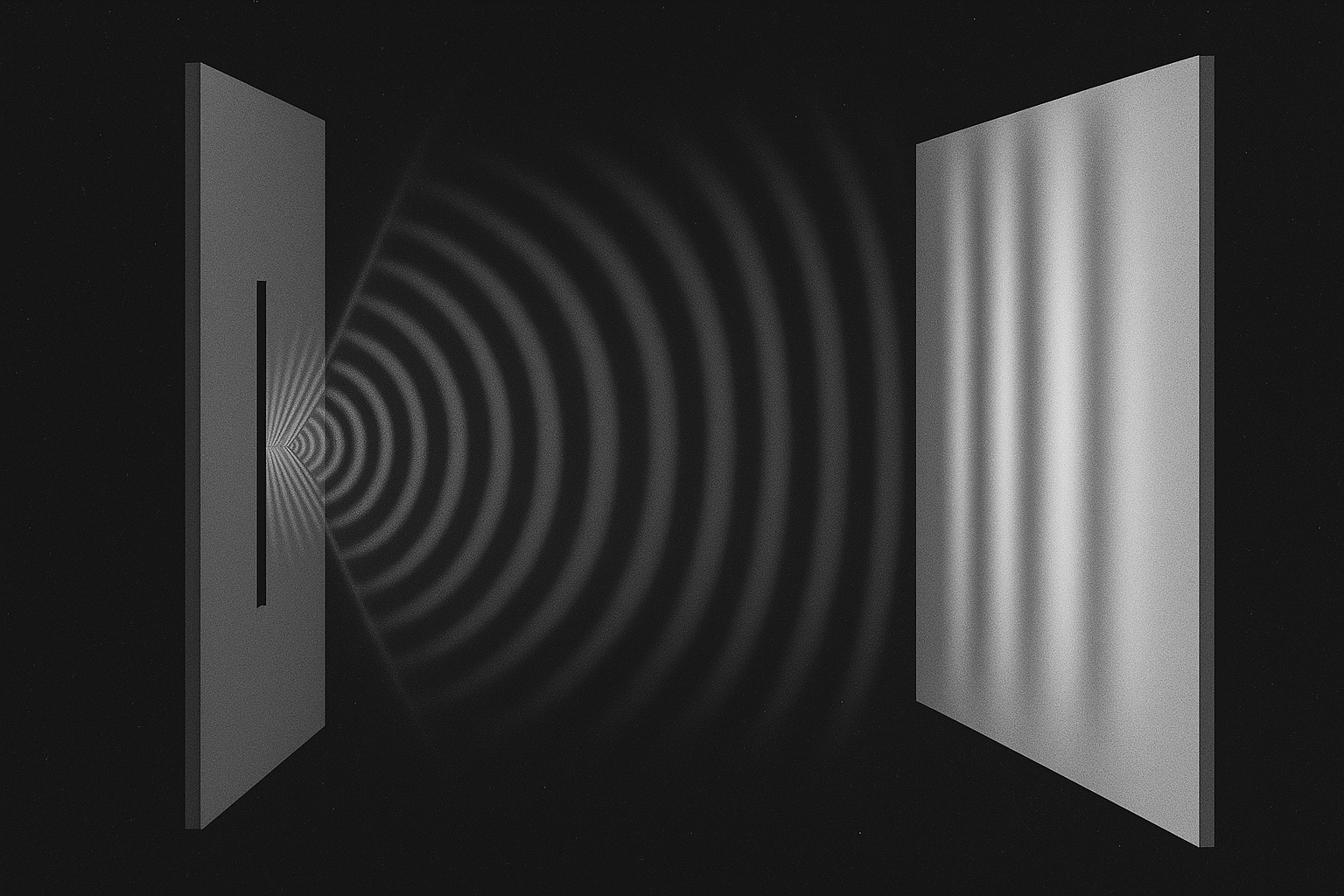

25 - SED and Schrödinger’s Equations

Schrödinger’s equation describes how quantum systems evolve, but SED reveals the physical origin behind the maths: spin. Matter waves aren’t abstract and purely probabilistic - they emerge from the rotational motion of energy in soliton structures. Spin, encoded in Planck’s constant, is the heartbeat of matter itself. This chapter bridges wave mechanics and real structure, grounding quantum behaviour in electromagnetic motion.

26 - What is a Neutrino?

Neutrinos are the ghost particles of the universe - abundant, fast, and almost never interacting with anything. SED suggests they are oversized, low energy rotons with hidden structure and a single unchanging spin. Their mysterious ability to morph between three types may be tied to their orientation through the three dimensions of space. Could their elusive nature be due to an undetectable charge and giant size? This chapter explores the particle that slips through almost everything, including our understanding.

27 - What is a Wave and What is Waving?

Are the waves in quantum physics real, or just mathematical ghosts? SED argues they’re electromagnetic through and through - real, measurable, and the very essence of both matter and energy. This chapter challenges the Copenhagen view, rooting wave-particle duality in structured energy, not abstract probability. What if potential is more physical than we could imagine?

28 - Why Gravitational Mass Equals Inertial Mass

What makes mass resist changes in motion? And what makes it attract other mass? This chapter explores the link between inertial and gravitational mass, proposing they stem from the same origin: space compression during matter formation. Using the SED framework, it offers a tangible geometric explanation for inertia and gravity, and hints at the futuristic possibility of anti-gravity. Could mastering the geometry of space lead to revolutionary new motion?

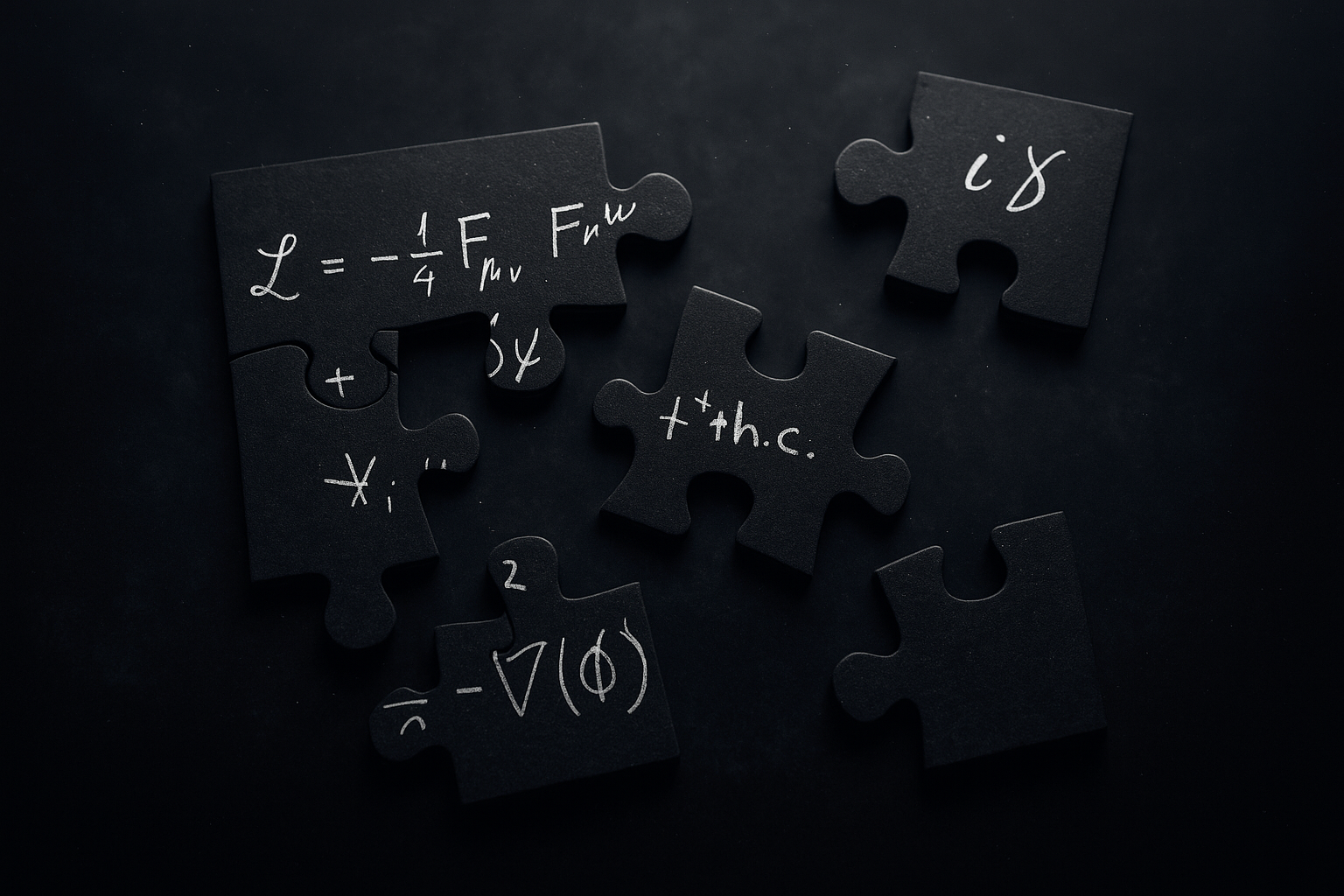

29 - Energy Is Not Quantised

Is energy truly quantised? This chapter challenges a core quantum assumption, proposing that while Planck’s constant (h) is undoubtedly quantised, energy itself flows as a continuum - its apparent granularity an illusion shaped by the fixed frequency of the particle that carries it. Photons may arrive in discrete packets, but the underlying amount of energy can in theory take on any value. What determines a particle’s mass or energy? The search for deeper understanding continues.
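
For reference, the standard relation between a photon’s energy and its frequency is E = hf. In this minimal Python sketch (the frequencies are illustrative assumptions), every photon at a fixed frequency carries the same energy, yet because the frequency can be any positive value, so can the energy per photon.

```python
H = 6.62607015e-34    # Planck constant, J s (exact SI value)
EV = 1.602176634e-19  # joules per electronvolt (exact SI value)


def photon_energy_joules(frequency_hz: float) -> float:
    """Energy of a single photon, E = h f."""
    return H * frequency_hz


for f in (5.0e14, 5.1e14, 5.0e18):  # visible, slightly higher, X-ray (illustrative)
    e = photon_energy_joules(f)
    print(f"f = {f:.2e} Hz -> E = {e:.3e} J = {e / EV:.3f} eV")
```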

30 - Conclusion

A simpler and more complete physics based on structure is offered, ranging from insights into the formation of matter, gravity, and the fine structure constant to a clearer understanding of how the universe works. A theory of everything has been proposed. The physics community will ultimately be the judge. Where to from here?

31 - Further Studies

These are the areas where further investigation is needed to complete the theories in The Origin of Everything.

References

Interested in further reading? These are the previous authors and books that helped to form the ideas in The Origin of Everything.

Foreword

Before diving into the main content, read here about the motivation behind the book and the big questions it seeks to answer. See who inspired the work, the thinking that led to this theory, and why it might just change the way we understand the universe.

01 - Introduction

Modern physics often buries insight under complexity. The theory outlined in The Origin of Everything offers a return to simplicity and a new vision of how the universe is built. Written for curious minds, it presents a bold, unified theory of everything - grounded in known physics, inspired by new insight.

02 - Structural Electrodynamics (SED)

Here we meet the core concept of the book - Structural Electrodynamics (SED): matter is made from energy loops, not point particles. These loops, called rotons, are electromagnetic structures that trap energy in a spinning, three-dimensional wave. SED offers a unified framework that can explain particles, forces, and even gravity from first principles.

03 - Matter and Energy

This chapter redefines the boundary between energy and matter. Energy flows in flat, fast circular waves, but when curled into a tight three-dimensional loop, it becomes matter. Using this model, we see that light and matter aren’t opposites but two states of the same underlying field.

04 - Quantum is Not That Strange

Quantum physics seems weird only when we treat it as separate from classical physics. This chapter shows that if you accept that all particles are waves with structure and spin, the quantum world starts to make intuitive sense. Wave-particle duality and other “mysteries” are natural outcomes of field behaviour.

05 - Angular Momentum & Planck’s Constant h

Spin is not a quirky quantum property. It's the foundation of all matter and physical action. Planck’s constant defines the smallest unit of angular momentum, making it the heartbeat of the universe’s structure. Everything spins, and spin defines everything.

06 - The Uncertainty Principle

The Uncertainty Principle isn’t about fuzziness or confusion. It’s about the deep interconnection between motion and measurement. You can’t perfectly know a particle’s position and momentum at the same time because they're different components of the same process - spin. This chapter reframes uncertainty as a natural limit of structured fields.
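
The limit referred to here is usually written Δx Δp ≥ ħ/2. As a worked example under an assumed confinement of 10⁻¹⁰ m (roughly atomic size), this sketch computes the smallest momentum spread allowed and the corresponding velocity spread for an electron. It uses only the standard formula and does not depend on the SED interpretation.

```python
HBAR = 1.054571817e-34  # reduced Planck constant, J s (CODATA 2018)
M_E = 9.1093837015e-31  # electron mass, kg (CODATA 2018)


def min_momentum_spread(delta_x: float) -> float:
    """Smallest momentum uncertainty allowed by dx * dp >= hbar / 2."""
    return HBAR / (2 * delta_x)


dx = 1e-10  # m, roughly the size of an atom (illustrative assumption)
dp = min_momentum_spread(dx)
print(f"dp >= {dp:.3e} kg m/s, i.e. dv >= {dp / M_E:.3e} m/s for an electron")
```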

07 - What are Fields?

Fields are more than mathematical abstractions. They are the basis of the entire physical universe, and there is only one type - electromagnetic. This chapter lays the foundation for understanding how all energy, matter, and forces emerge from field dynamics.

08 - Charge and Magnetism

What if charge and magnetism aren’t fundamental properties but consequences of something deeper? Here we explore how electromagnetic fields give rise to electric charge and magnetism, in contrast to the current school of thought. It is the elegant interplay of these dynamic fields within the roton that creates all of the forces in nature.

09 - The Electron

In this chapter, the structure of the electron is revealed. It is made from two gamma-ray photons colliding at right angles, whose energy locks into a spinning loop called a roton (or rotating photon) - a stable, three-dimensional wave. This motion gives rise to mass, charge, and spin, with no need for point particles. The electron is light, folded and locked into a soliton.

10 - The Atom

This chapter shows how protons, neutrons, and electrons form from light itself, held together purely by the electromagnetic force - no need for an artificial “strong” or “weak” force. Through Structural Electrodynamics, we uncover the physical structure behind the atom, the nucleus, fusion, and the stability of matter itself.

11 - The Universe

From the subatomic to the cosmic, SED extends its insights to the vast structure and evolution of the universe. This chapter explores how time, matter, and space emerged through waves of creation, annihilation, and expansion - guided by electromagnetic fields. A fresh explanation for inflation, antimatter asymmetry, and mass reveals the universe as a dynamic interplay of field-based phenomena.

12 - What is Gravity?

Gravity isn’t a force - it’s space itself compressed due to the formation of matter. This chapter redefines gravity as a geometric effect caused by the 3D twisting of EM fields, revealing the true link between mass, spin, and space-time. SED offers a physical explanation Einstein never had, uncovering why gravity is weak, always attractive, and utterly fundamental.
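
To put a number on how weak gravity is, one can compare the electric and gravitational forces between two electrons. Both fall off as 1/r², so the ratio does not depend on their separation. This sketch uses CODATA 2018 constants; it quantifies the weakness but makes no claim about its cause.

```python
K_E = 8.9875517923e9    # Coulomb constant 1/(4 pi eps0), N m^2 / C^2
G = 6.67430e-11         # gravitational constant, N m^2 / kg^2 (CODATA 2018)
E = 1.602176634e-19     # elementary charge, C (exact)
M_E = 9.1093837015e-31  # electron mass, kg (CODATA 2018)

# Both forces scale as 1/r^2, so r cancels out of the ratio.
ratio = (K_E * E**2) / (G * M_E**2)
print(f"Electric / gravitational force between two electrons: {ratio:.2e}")
```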

13 - Dark Energy, Dark Matter, and Black Holes

SED suggests that even the darkest aspects of the cosmos (black holes, dark matter, and dark energy) have one thing in common - light or EM fields. From accelerating expansion powered by ongoing annihilation, to invisible rotons that may comprise dark matter, this chapter offers field-based explanations where standard models fall silent. It even draws a provocative link between black holes and fundamental particles through the fine structure constant, hinting at a cosmic symmetry.

14 - SED and Maxwell’s Equations

Maxwell’s equations are the foundation of electromagnetism, and SED brings them to life by modelling particles as dynamic, twisting field structures called rotons. Within this framework, electric charge emerges from divergence, mass arises from curl, and the dance between E and B fields sustains both light and matter. The roton isn’t just compatible with Maxwell’s theory - it’s a vivid physical manifestation of it.
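
For reference, these are Maxwell’s equations in their standard SI differential form, showing the divergence (∇·) and curl (∇×) terms the summary refers to. Reading those terms as the origin of charge and mass is SED’s own interpretation and is not part of the equations themselves.

```latex
\begin{aligned}
\nabla \cdot \mathbf{E} &= \frac{\rho}{\varepsilon_0} && \text{(Gauss's law)} \\
\nabla \cdot \mathbf{B} &= 0 && \text{(no magnetic monopoles)} \\
\nabla \times \mathbf{E} &= -\frac{\partial \mathbf{B}}{\partial t} && \text{(Faraday's law)} \\
\nabla \times \mathbf{B} &= \mu_0 \mathbf{J} + \mu_0 \varepsilon_0 \frac{\partial \mathbf{E}}{\partial t} && \text{(Amp\`ere-Maxwell law)}
\end{aligned}
```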

15 - The Fine Structure Constant

Since its discovery in 1916 by Sommerfeld, the Fine-Structure Constant, α, has long been a mystery, known precisely but not understood. SED proposes that α represents the ratio between the width of a particle’s electromagnetic path and its overall wavelength, giving physical meaning to this dimensionless number. In this model, particles are structured as twisting electromagnetic fields (rotons), and only a specific internal twist, due to α, can sustain their stable motion. Thus, α emerges naturally from the geometry of light trapped in matter.
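
The conventional definition of the fine-structure constant combines the elementary charge, the reduced Planck constant, the speed of light, and the vacuum permittivity: α = e²/(4πε₀ħc). This short sketch, using CODATA 2018 values, simply reproduces the familiar value of about 1/137; the geometric meaning proposed by SED is not computed here.

```python
import math

# CODATA 2018 values (SI); e and c are exact by definition.
E = 1.602176634e-19      # elementary charge, C
HBAR = 1.054571817e-34   # reduced Planck constant, J s
C = 299_792_458.0        # speed of light, m/s
EPS0 = 8.8541878128e-12  # vacuum permittivity, F/m

alpha = E**2 / (4 * math.pi * EPS0 * HBAR * C)
print(f"alpha = {alpha:.10f}, 1/alpha = {1 / alpha:.6f}")
```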

16 - SED and Spin

An eternal twist woven into the fabric of every particle. SED reveals that this motion isn’t abstract or symbolic, but real angular momentum born from the endless dance of light itself. Through the geometry of rotons, matter inherits its spin, mass, and dual magnetic states. What quantum theory once shrouded in mystery, SED brings into view with clarity and simplicity.

17 - Problems with the Standard Model

The Standard Model claims to explain the fundamental particles and forces of nature - but SED challenges its complexity, contradictions, and reliance on unverified entities like quarks, gluons, Higgs Bosons, and isospin. What if mass, charge, and spin emerge from elegant field structures instead of patchwork theories? This chapter invites you to question the orthodoxy and discover a simpler, more unified picture of the universe.

18 - Unification of the Four Forces

What if all the forces of nature - gravity, electromagnetism, and the strong and weak nuclear forces - were just different expressions of a single phenomenon? This chapter presents SED’s radical proposal: that electromagnetic fields alone are responsible for every interaction in the universe. From the binding of atomic nuclei to the warping of space-time, SED reveals how geometry, spin, and field dynamics replace the existing concept of separate forces. It’s an elegant unification, long sought by physics and perhaps finally within reach.

19 - What are de Broglie Waves?

In the 1920s, de Broglie’s bold idea that all matter behaves like a wave helped spark the quantum revolution. SED brings it vividly to life. By connecting wave-particle duality to real spinning fields, this chapter reimagines de Broglie waves not as abstract probabilities, but as a tangible, physical consequence of dynamic fields. Discover how the dance of spin, wavelength, and momentum shapes both the quantum and the cosmic.
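
The de Broglie relation itself is λ = h/p. As a worked example, assuming an electron moving at 10⁶ m/s (slow enough that the non-relativistic momentum p = mv is adequate), the wavelength comes out at about 7.3 × 10⁻¹⁰ m, comparable to the spacing of atoms in a solid.

```python
H = 6.62607015e-34      # Planck constant, J s (exact)
M_E = 9.1093837015e-31  # electron mass, kg (CODATA 2018)


def de_broglie_wavelength(mass_kg: float, speed_m_s: float) -> float:
    """lambda = h / p, using the non-relativistic momentum p = m v."""
    return H / (mass_kg * speed_m_s)


# Illustrative case: an electron moving at 1e6 m/s (well below c).
wavelength = de_broglie_wavelength(M_E, 1.0e6)
print(f"{wavelength:.3e} m")
```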

20 - What is a Spinor?

Spinors are essential to modern physics, yet their physical structure has remained obscure for over a century. This chapter explores a new perspective from SED that connects spinors with the structure of matter in a clear and testable way. It shows how their strange properties (like requiring a 720° rotation to return to their original state) can be understood simply by virtue of the roton, as real features of the particles they describe. The result is a model that links the previously abstract spinor to the behaviour of the electron, revealing them as one and the same.
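
The 720° property can be checked numerically with the standard quantum-mechanical rotation of a spin-1/2 state about the z axis, U(θ) = diag(e^(-iθ/2), e^(iθ/2)). In this sketch (the starting spinor is an arbitrary assumption), a 360° rotation flips the sign of both components, and only a 720° rotation restores the original state. It uses textbook quantum mechanics, not the roton model.

```python
import cmath
import math


def rotate_spinor_z(spinor, angle_rad):
    """Rotate a spin-1/2 state about z: U = diag(e^{-i a/2}, e^{+i a/2})."""
    up, down = spinor
    return (cmath.exp(-1j * angle_rad / 2) * up,
            cmath.exp(1j * angle_rad / 2) * down)


psi = (1 / math.sqrt(2), 1 / math.sqrt(2))  # an arbitrary normalised spinor
after_360 = rotate_spinor_z(psi, 2 * math.pi)
after_720 = rotate_spinor_z(psi, 4 * math.pi)
print(after_360)  # each component has flipped sign (up to rounding)
print(after_720)  # back to the original state (up to rounding)
```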

21 - SED and Polarisation

A sine wave is circular motion viewed side-on. Fundamentally light is always spinning. This chapter explores how the orientation of that spin gives rise to different types of polarisation: vertical, horizontal, and circular. SED reveals how aligned spins in photons unlock phenomena from sunglasses to 3D cinema. Discover how polarisation offers a window into the deeper structure of light itself.
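
The opening claim is easy to check: a point moving uniformly round a circle, seen edge-on so that its depth is hidden, traces out a sine wave. A minimal sketch, assuming a unit radius and one revolution per second:

```python
import math


def circle_point(radius: float, omega: float, t: float) -> tuple[float, float]:
    """Position (x, y) of a point moving uniformly around a circle."""
    return radius * math.cos(omega * t), radius * math.sin(omega * t)


def side_on_view(radius: float, omega: float, t: float) -> float:
    """Viewed edge-on, the x (depth) coordinate is hidden; only y is seen."""
    _, y = circle_point(radius, omega, t)
    return y


omega = 2 * math.pi  # one revolution per second (illustrative)
samples = [side_on_view(1.0, omega, t / 8) for t in range(9)]
print([round(s, 3) for s in samples])  # one full cycle of a sine wave
```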

22 - What is a Measurement?

What exactly happens when we measure something? In classical science, measurements were thought to simply reveal fixed properties - but quantum physics reveals a deeper truth: the act of measuring can influence the system itself. This chapter explores how observation collapses possibilities into outcomes, and how uncertainty sets limits on what we can ever truly know. A fascinating look at the fine line between potential and reality.

23 - What is an Observer?

What does it really mean to observe something in physics and why does it matter? This chapter explores the observer’s role in transforming possibilities into actual events, especially at the quantum scale. From photons emitted one by one to the mysterious collapse of the wave function, it challenges the idea that observation is passive. Is the observer merely watching, or are they fundamentally shaping what unfolds?

24 - What is Entropy?

Often described as a measure of disorder, entropy is a slippery but fundamental concept shaping our understanding of the universe's fate. From the quiet structure of living systems to the chaotic drift toward cosmic “heat death,” this chapter explores entropy as both a mathematical idea and a deep physical truth. Is energy the driver of disorder? Can entropy itself be stored? Is there such a thing as potential entropy? These provocative questions are explored further.